I just watched OpenAI’s announcement about its upcoming o3 and o3-mini reasoning AI models, and with this release the company has reached a milestone on the road to artificial general intelligence.

The biggest news is that the o3 model took a major leap towards Artificial General Intelligence, or AGI, by achieving human-level performance on a test that requires solving problems it has never encountered before.

AGI is a hypothetical type of AI that has reached or surpassed human-level cognitive abilities, along with the mental flexibility of the human brain to solve many different types of problems. Some researchers suggest it could theoretically become sentient or self-aware. A scary take on this is Skynet, the AGI in the Terminator movies that wants to destroy all humans. Yikes.

On Friday, December 20th, OpenAI CEO Sam Altman and researchers Mark Chen and Hongyu Ren hosted a 22-minute livestream showcasing the new o3 models’ capabilities.

About five minutes into the OpenAI announcement, Altman and Chen welcomed Greg Kamradt, president of the ARC Prize Foundation, to talk about the o3 model’s AGI capabilities.

Kamradt announced that the o3 system, running in its high-compute configuration, scored 87.5% on the ARC-AGI benchmark for general intelligence, far above the previous best AI score of 55%. Human performance on this test is around 85%, so o3’s results have crossed into new territory in the ARC-AGI world, Kamradt said in the presentation.

“I need to fix my AI intuitions about what AI can actually do and what it’s actually capable of, especially in this o3 world,” he said.

ARC-AGI tests an AI system’s ability to solve a previously unknown, or novel, problem. In the example below, shown by Kamradt during the OpenAI announcement, a human can easily figure out that a darker blue square is added next to the lighter blue squares to make a bigger square. Problems like this have been a real challenge for AI systems, until now.

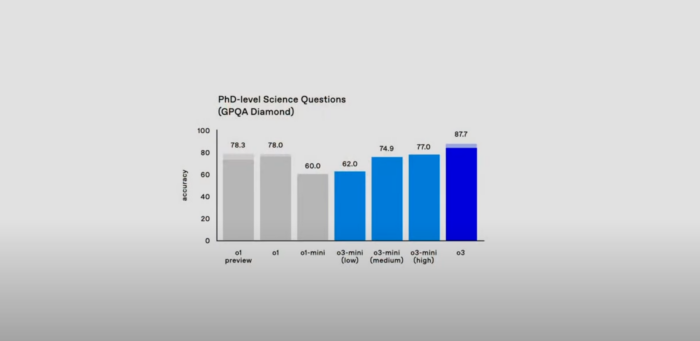

The o3 and o3-mini models showed impressive results in other areas as well. For example, o3 surpassed human PhD-level performance on difficult science questions, scoring 87.7% on the GPQA Diamond benchmark, whereas a PhD-level expert typically scores around 70% in their own field.

OpenAI’s o3 model also achieved an impressive score of 25.2% on Epoch AI’s FrontierMath benchmark, which OpenAI’s Chen described in the presentation as the toughest mathematical benchmark.

“This is a dataset that consists of novel, unpublished, and also very hard problems,” Chen said. “It would take professional mathematicians hours or even days to solve one of these problems.”

The big difference between o3 and o3-mini is that o3 will be the full, most powerful version and also the most expensive, while o3-mini is a more cost-effective model optimized for situations where maximum computational power isn’t necessary.

As for why OpenAI skipped straight from o1 to o3, Altman said it was out of respect for the company’s friends at Telefónica, which owns the O2 telecommunications brand, and because OpenAI has a grand tradition of being “really, truly bad at names.”

Coding like a pro

Are human coders about to be out of a job?

Some programmers are saying that nothing is going to change for them in the near future, but others are sounding the alarm about getting replaced by AI.

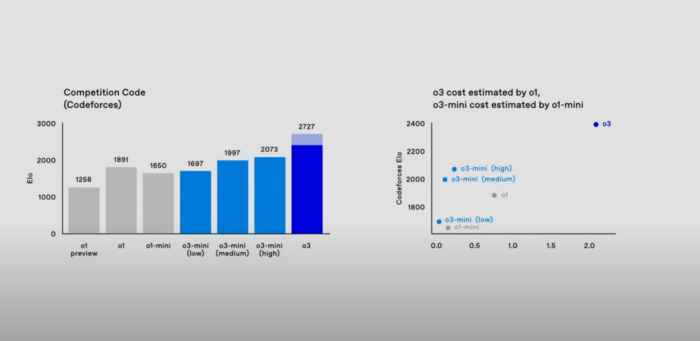

o3 just beat out 99% of human competitors in competitive programming, reaching a Codeforces rating of 2727. However, o3 is also expensive: at its highest compute setting, a single task can cost more than $1,000.

For now, we still need humans to understand the code being written and to decide how applications are designed. But maybe not for long. Here’s a good take on how AI is going to affect programmers:

Revolutionizing industries

OpenAI will soon be releasing autonomous AI agents that can manage complex tasks without human intervention. Businesses large and small could benefit from AI performing jobs that only humans could do before.

The downside?

Besides smart AI being very energy-intensive and expensive, many people could lose their jobs as their industries are heavily disrupted by increasingly capable AI models.

And there could be other unforeseen catastrophic effects we haven’t accounted for. Remember Terminator? Even today’s less capable AIs have shown the ability to be harmful or deceitful, and AI companies, including OpenAI, released those models anyway.

This time, OpenAI seems to be taking safety seriously before o3 and o3-mini reach the public.

Safety researchers are currently being encouraged to sign up to test the models before the public release, which is just around the corner. The more cost-effective o3-mini is slated for a late January release, and the full o3 version will follow shortly after.

What do you think about the future of AGI? Is AI going to take humanity into a bright and promising future, or will we all be hiding in ditches, machine guns in hand, hoping to preserve the small human population that we have left? Feel free to leave a comment and let us know what you think.

- OpenAI’s new reasoning AI model achieves human level results on intelligence test - January 2, 2025
